How do I select by partial string from a pandas DataFrame?

This post is meant for readers who want to

- search for a substring in a string column (the simplest case) as in

df1[df1['col'].str.contains(r'foo(?!$)')] - search for multiple substrings (similar to

isin), e.g., withdf4[df4['col'].str.contains(r'foo|baz')] - match a whole word from text (e.g., “blue” should match “the sky is blue” but not “bluejay”), e.g., with

df3[df3['col'].str.contains(r'bblueb')] - match multiple whole words

- Understand the reason behind “ValueError: cannot index with vector containing NA / NaN values” and correct it with

str.contains('pattern',na=False)

…and would like to know more about what methods should be preferred over others.

(P.S.: I’ve seen a lot of questions on similar topics, I thought it would be good to leave this here.)

Friendly disclaimer, this is post is long.

Basic Substring Search

# setup

df1 = pd.DataFrame({'col': ['foo', 'foobar', 'bar', 'baz']})

df1

col

0 foo

1 foobar

2 bar

3 baz

str.contains can be used to perform either substring searches or regex based search. The search defaults to regex-based unless you explicitly disable it.

Here is an example of regex-based search,

# find rows in `df1` which contain "foo" followed by something

df1[df1['col'].str.contains(r'foo(?!$)')]

col

1 foobar

Sometimes regex search is not required, so specify regex=False to disable it.

#select all rows containing "foo"

df1[df1['col'].str.contains('foo', regex=False)]

# same as df1[df1['col'].str.contains('foo')] but faster.

col

0 foo

1 foobar

Performance wise, regex search is slower than substring search:

df2 = pd.concat([df1] * 1000, ignore_index=True)

%timeit df2[df2['col'].str.contains('foo')]

%timeit df2[df2['col'].str.contains('foo', regex=False)]

6.31 ms ± 126 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

2.8 ms ± 241 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

Avoid using regex-based search if you don’t need it.

Addressing ValueErrors

Sometimes, performing a substring search and filtering on the result will result in

ValueError: cannot index with vector containing NA / NaN values

This is usually because of mixed data or NaNs in your object column,

s = pd.Series(['foo', 'foobar', np.nan, 'bar', 'baz', 123])

s.str.contains('foo|bar')

0 True

1 True

2 NaN

3 True

4 False

5 NaN

dtype: object

s[s.str.contains('foo|bar')]

# ---------------------------------------------------------------------------

# ValueError Traceback (most recent call last)

Anything that is not a string cannot have string methods applied on it, so the result is NaN (naturally). In this case, specify na=False to ignore non-string data,

s.str.contains('foo|bar', na=False)

0 True

1 True

2 False

3 True

4 False

5 False

dtype: bool

How do I apply this to multiple columns at once?

The answer is in the question. Use DataFrame.apply:

# `axis=1` tells `apply` to apply the lambda function column-wise.

df.apply(lambda col: col.str.contains('foo|bar', na=False), axis=1)

A B

0 True True

1 True False

2 False True

3 True False

4 False False

5 False False

All of the solutions below can be “applied” to multiple columns using the column-wise apply method (which is OK in my book, as long as you don’t have too many columns).

If you have a DataFrame with mixed columns and want to select only the object/string columns, take a look at select_dtypes.

Multiple Substring Search

This is most easily achieved through a regex search using the regex OR pipe.

# Slightly modified example.

df4 = pd.DataFrame({'col': ['foo abc', 'foobar xyz', 'bar32', 'baz 45']})

df4

col

0 foo abc

1 foobar xyz

2 bar32

3 baz 45

df4[df4['col'].str.contains(r'foo|baz')]

col

0 foo abc

1 foobar xyz

3 baz 45

You can also create a list of terms, then join them:

terms = ['foo', 'baz']

df4[df4['col'].str.contains('|'.join(terms))]

col

0 foo abc

1 foobar xyz

3 baz 45

Sometimes, it is wise to escape your terms in case they have characters that can be interpreted as regex metacharacters. If your terms contain any of the following characters…

. ^ $ * + ? { } [ ] | ( )

Then, you’ll need to use re.escape to escape them:

import re

df4[df4['col'].str.contains('|'.join(map(re.escape, terms)))]

col

0 foo abc

1 foobar xyz

3 baz 45

re.escape has the effect of escaping the special characters so they’re treated literally.

re.escape(r'.foo^')

# '\.foo\^'

Matching Entire Word(s)

By default, the substring search searches for the specified substring/pattern regardless of whether it is full word or not. To only match full words, we will need to make use of regular expressions here—in particular, our pattern will need to specify word boundaries (b).

For example,

df3 = pd.DataFrame({'col': ['the sky is blue', 'bluejay by the window']})

df3

col

0 the sky is blue

1 bluejay by the window

Now consider,

df3[df3['col'].str.contains('blue')]

col

0 the sky is blue

1 bluejay by the window

v/s

df3[df3['col'].str.contains(r'bblueb')]

col

0 the sky is blue

Multiple Whole Word Search

Similar to the above, except we add a word boundary (b) to the joined pattern.

p = r'b(?:{})b'.format('|'.join(map(re.escape, terms)))

df4[df4['col'].str.contains(p)]

col

0 foo abc

3 baz 45

Where p looks like this,

p

# '\b(?:foo|baz)\b'

A Great Alternative: Use List Comprehensions!

Because you can! And you should! They are usually a little bit faster than string methods, because string methods are hard to vectorise and usually have loopy implementations.

Instead of,

df1[df1['col'].str.contains('foo', regex=False)]

Use the in operator inside a list comp,

df1[['foo' in x for x in df1['col']]]

col

0 foo abc

1 foobar

Instead of,

regex_pattern = r'foo(?!$)'

df1[df1['col'].str.contains(regex_pattern)]

Use re.compile (to cache your regex) + Pattern.search inside a list comp,

p = re.compile(regex_pattern, flags=re.IGNORECASE)

df1[[bool(p.search(x)) for x in df1['col']]]

col

1 foobar

If “col” has NaNs, then instead of

df1[df1['col'].str.contains(regex_pattern, na=False)]

Use,

def try_search(p, x):

try:

return bool(p.search(x))

except TypeError:

return False

p = re.compile(regex_pattern)

df1[[try_search(p, x) for x in df1['col']]]

col

1 foobar

More Options for Partial String Matching: np.char.find, np.vectorize, DataFrame.query.

In addition to str.contains and list comprehensions, you can also use the following alternatives.

np.char.find

Supports substring searches (read: no regex) only.

df4[np.char.find(df4['col'].values.astype(str), 'foo') > -1]

col

0 foo abc

1 foobar xyz

np.vectorize

This is a wrapper around a loop, but with lesser overhead than most pandas str methods.

f = np.vectorize(lambda haystack, needle: needle in haystack)

f(df1['col'], 'foo')

# array([ True, True, False, False])

df1[f(df1['col'], 'foo')]

col

0 foo abc

1 foobar

Regex solutions possible:

regex_pattern = r'foo(?!$)'

p = re.compile(regex_pattern)

f = np.vectorize(lambda x: pd.notna(x) and bool(p.search(x)))

df1[f(df1['col'])]

col

1 foobar

DataFrame.query

Supports string methods through the python engine. This offers no visible performance benefits, but is nonetheless useful to know if you need to dynamically generate your queries.

df1.query('col.str.contains("foo")', engine='python')

col

0 foo

1 foobar

More information on query and eval family of methods can be found at Dynamically evaluate an expression from a formula in Pandas.

Recommended Usage Precedence

- (First)

str.contains, for its simplicity and ease handling NaNs and mixed data - List comprehensions, for its performance (especially if your data is purely strings)

np.vectorize- (Last)

df.query

Python is a great language for doing data analysis, primarily because of the fantastic ecosystem of data-centric python packages. Pandas is one of those packages and makes importing and analyzing data much easier.

Pandas str.find() method is used to search a substring in each string present in a series. If the string is found, it returns the lowest index of its occurrence. If string is not found, it will return -1.

Start and end points can also be passed to search a specific part of string for the passed character or substring.

Syntax: Series.str.find(sub, start=0, end=None)

Parameters:

sub: String or character to be searched in the text value in series

start: int value, start point of searching. Default is 0 which means from the beginning of string

end: int value, end point where the search needs to be stopped. Default is None.

Return type: Series with index position of substring occurrence

To download the CSV used in code, click here.

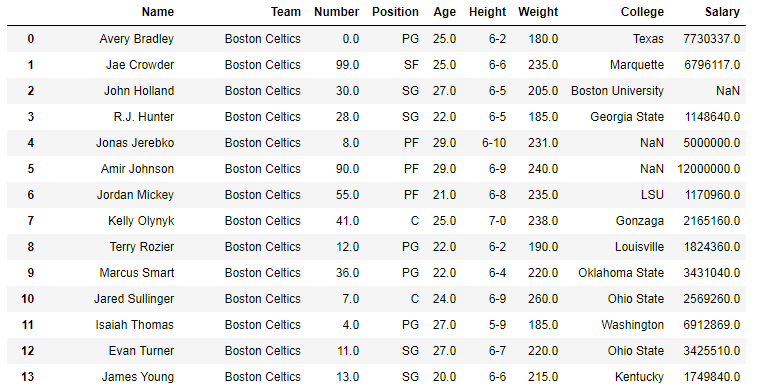

In the following examples, the data frame used contains data of some NBA players. The image of data frame before any operations is attached below.

Example #1: Finding single character

In this example, a single character ‘a’ is searched in each string of Name column using str.find() method. Start and end parameters are kept default. The returned series is stored in a new column so that the indexes can be compared by looking directly. Before applying this method, null rows are dropped using .dropna() to avoid errors.

Python3

Output:

As shown in the output image, the occurrence of index in the Indexes column is equal to the position first occurrence of character in the string. If the substring doesn’t exist in the text, -1 is returned. It can also be seen by looking at the first row itself that ‘A’ wasn’t considered which proves this method is case sensitive.

Example #2: Searching substring (More than one character)

In this example, ‘er’ substring will be searched in the Name column of data frame. The start parameter is kept 2 to start search from 3rd(index position 2) element.

Python3

Output:

As shown in the output image, the last index of occurrence of substring is returned. But it can be seen, in case of Terry Rozier(Row 9 in data frame), instead of first occurrence of ‘er’, 10 was returned. This is because the start parameter was kept 2 and the first ‘er’ occurs before that.

Last Updated :

18 Jan, 2023

Like Article

Save Article

In this tutorial, we will look at how to search for a string (or a substring) in a pandas dataframe column with the help of some examples.

How to check if a pandas series contains a string?

You can use the pandas.series.str.contains() function to search for the presence of a string in a pandas series (or column of a dataframe). You can also pass a regex to check for more custom patterns in the series values. The following is the syntax:

# usnig pd.Series.str.contains() function with default parameters

df['Col'].str.contains("string_or_pattern", case=True, flags=0, na=None, regex=True)

It returns a boolean Series or Index based on whether a given pattern or regex is contained within a string of a Series or Index.

The case parameter tells whether to match the string in a case-sensitive manner or not.

The regex parameter tells the function that you want to match for a specific regex pattern.

The flags parameter can be used to pass additional flags for the regex match through to the re module (for example re.IGNORECASE)

Let’s look at some examples to see the above syntax in action

Pass the string you want to check for as an argument.

import pandas as pd

# create a pandas series

players = pd.Series(['Rahul Dravid', 'Yuvraj Singh', 'Sachin Tendulkar', 'Mahendra Singh Dhoni', 'Virat Kohli'])

# names with 'Singh'

print(players.str.contains('Singh', regex=False))

Output:

0 False 1 True 2 False 3 True 4 False dtype: bool

Here, we created a pandas series containing names of some India’s top cricketers. We then find the names containing the word “Singh” using the str.contains() function. We also pass regex=False to indicate not to assume the passed value as a regex pattern. In this case, you can also go with the default regex=True as it would not make any difference.

Also note that we get the result as a pandas series of boolean values representing which of the values contained the given string. You can use this series to filter values in the original series.

For example, let’s only print out the names containing the word “Singh”

# display the type

type(players.str.contains('Singh'))

# filter for names containing 'Singh'

print(players[players.str.contains('Singh')])

Output:

1 Yuvraj Singh 3 Mahendra Singh Dhoni dtype: object

Here we applied the .str.contains() function on a pandas series. Note that you can also apply it on individual columns of a pandas dataframe.

# create a dataframe

df = pd.DataFrame({

'Name': ['Rahul Dravid', 'Yuvraj Singh', 'Sachin Tendulkar', 'Mahendra Singh Dhoni', 'Virat Kohli'],

'IPL Team': ['RR', 'KXIP', 'MI', 'CSK', 'RCB']

})

# filter for names that have "Singh"

print(df[df['Name'].str.contains('Singh', regex=False)])

Output:

Name IPL Team 1 Yuvraj Singh KXIP 3 Mahendra Singh Dhoni CSK

Search for string irrespective of case

By default, the pd.series.str.contains() function’s string searches are case sensitive.

# create a pandas series

players = pd.Series(['Rahul Dravid', 'yuvraj singh', 'Sachin Tendulkar', 'Mahendra Singh Dhoni', 'Virat Kohli'])

# names with 'Singh' irrespective of case

print(players.str.contains('Singh', regex=False))

Output:

0 False 1 False 2 False 3 True 4 False dtype: bool

We get False for “yuvraj singh” because it does not contain the word “Singh” in the same case.

You can, however make the function search for strings irrespective of the case by passing False to the case parameter.

# create a pandas series

players = pd.Series(['Rahul Dravid', 'yuvraj singh', 'Sachin Tendulkar', 'Mahendra Singh Dhoni', 'Virat Kohli'])

# names with 'Singh' irrespective of case

print(players.str.contains('Singh', regex=False, case=False))

Output:

0 False 1 True 2 False 3 True 4 False dtype: bool

Search for a matching regex pattern in column

You can also pass regex patterns to the above function for searching more complex values/patterns in the series.

# create a pandas series

balls = pd.Series(['wide', 'no ball', 'wicket', 'dot ball', 'runs'])

# check for wickets or dot balls

good_balls = balls.str.contains('wicket|dot ball', regex=True)

# display good balls

print(good_balls)

Output:

0 False 1 False 2 True 3 True 4 False dtype: bool

Here we created a pandas series with values representing different outcomes when a blower bowls a ball in cricket. Let’s say we want to find all the good balls which can be defined as either a wicket or a dot ball. We used the regex pattern 'wicket|dot ball' to match with either “wicket” or “dot ball”.

You can similarly write more complex regex patterns depending on your use-case to match values in a pandas series.

For more the pd.Series.str.contains() function, refer to its documentation.

With this, we come to the end of this tutorial. The code examples and results presented in this tutorial have been implemented in a Jupyter Notebook with a python (version 3.8.3) kernel having pandas version 1.0.5

Subscribe to our newsletter for more informative guides and tutorials.

We do not spam and you can opt out any time.

-

Piyush is a data professional passionate about using data to understand things better and make informed decisions. He has experience working as a Data Scientist in the consulting domain and holds an engineering degree from IIT Roorkee. His hobbies include watching cricket, reading, and working on side projects.

View all posts

Pandas Find – pd.Series.str.find()

If you’re looking for information on how to find data or cell within a Pandas DataFrame or Series, check out a future post – Locating Data Within A DataFrame. This post will be around finding substrings within a series of strings.

Often times you may want to know where a substring exists in a bigger string. You could be trying to extract an address, remove a piece of text, or simply wanting to find the first instance of a substring.

Pandas.Series.Str.Find() helps you locate substrings within larger strings. This has the identical functionality as =find() in Excel or Google Sheets.

Example: “day” is a substring within “Monday.” However, “day” is not a substring of “November,” since “day” does not appear in “November”

Pseudo code: “Monday”.find(“day”) returns 4. “day” starts at the 4th character in “Monday”

But first, what is a string and substring?

- String = Data type within python that represents text

- Substring = A piece of text within a larger piece of text

To find where a substring exists (if it does at all) within a larger series of strings you need to call pd.Series.str.find()

Pandas find returns an integer of the location (number of characters from the left) of a substring. It will return -1 if it does not exist

Find has two important arguments that go along with the function. Start & End

- Start (default = 0): Where you want .find() to start looking for your substring. By default you’ll start at the beginning of the string (location 0).

- End: Where you want .find() to finish looking for your substring.

Note: You would only use start & end if you didn’t want to search the entire string.

Check out more Pandas functions on our Pandas Page

Example

str.contains() method can be used to check if a pattern occurs in each string of a Series. str.startswith() and str.endswith() methods can also be used as more specialized versions.

In [1]: animals = pd.Series(['cat', 'dog', 'bear', 'cow', 'bird', 'owl', 'rabbit', 'snake'])

Check if strings contain the letter ‘a’:

In [2]: animals.str.contains('a')

Out[2]:

0 True

1 False

2 True

3 False

4 False

5 False

6 True

7 True

8 True

dtype: bool

This can be used as a boolean index to return only the animals containing the letter ‘a’:

In [3]: animals[animals.str.contains('a')]

Out[3]:

0 cat

2 bear

6 rabbit

7 snake

dtype: object

str.startswith and str.endswith methods work similarly, but they also accept tuples as inputs.

In [4]: animals[animals.str.startswith(('b', 'c'))]

# Returns animals starting with 'b' or 'c'

Out[4]:

0 cat

2 bear

3 cow

4 bird

dtype: object